Who set up the world's first website? When was it? Any idea how large the first commercial microwave oven was? Did you know two inventors, working independently, came up with near-identical integrated circuits at about the same time? Who were they? Read on to find out the answers and the backstories of a few other inventions.

Connecting the world

In 1969, the Internet took its first baby steps as Arpanet, a network created by the United States Advanced Research Projects Agency (ARPA, now known as DARPA). It connected universities and research centres, but its use was restricted to a small community of researchers.

Then, around 1990, the technology made a quantum jump. Back in 1980, Tim Berners-Lee, an English software consultant, had written a program called 'Enquire', named after 'Enquire Within Upon Everything', a Victorian-age encyclopaedia he had used as a child. He was working for CERN in Switzerland and wanted to organise all his work so that others could access it easily through their computers. Building on that idea, he developed a page-formatting language called HTML (HyperText Markup Language), a unique address for every web page called a URL (Uniform Resource Locator) and a set of rules called HTTP (HyperText Transfer Protocol) that allowed these pages to be linked together on the Internet. Berners-Lee is credited with setting up the world's first website in 1991.

Berners-Lee did not earn any money from his inventions. Others did, however: Marc Andreessen, who co-founded Netscape in 1994, became one of the Web's first millionaires.

It began with a bar of chocolate!

The discovery that microwaves could cook food super quickly was purely accidental. In 1945, American physicist Percy Spencer was testing a magnetron tube, engineered to produce very short radio waves for radar systems, when the chocolate bar in his pocket melted. Puzzled that he hadn't felt the heat, Spencer placed popcorn kernels near the tube, and in no time, the popcorn began crackling. His company, Raytheon, developed this idea further, and in 1947 the first commercial microwave oven was introduced, standing all of 1.5 metres high and weighing 340 kg!

Since this model was too expensive to mass-produce, Raytheon went back to the drawing board and, in the 1950s, came out with a microwave the size of a small refrigerator. A few years later came the first regular-sized oven, far cheaper and smaller than the previous models.

Chip-sized marvel

A microchip, often called a "chip" or an integrated circuit (IC), is what makes modern computers more compact and faster. Rarely larger than 5 cm in size and manufactured from a semi-conducting material, a chip contains intricate electronic circuits.

Two inventors, working independently, came up with near-identical integrated circuits at about the same time! In the late 1950s, American engineer Jack Kilby (Texas Instruments) and research engineer Robert Noyce (Fairchild Semiconductor Corporation) were both working on the same problem: how to pack the maximum number of electrical components into the minimum space. It occurred to them that all parts of a circuit, not just the transistor, could be made on a single chip of silicon, making the circuit smaller and much easier to produce.

In 1959, both engineers applied for patents and, instead of battling it out, decided to cooperate to improve chip technology. In 1961, Fairchild Semiconductor Corporation launched the first commercially available integrated circuit. This IC had barely five components and was the size of a small finger. Computers soon began using chips, and chips also helped create the first portable electronic calculators. Today an IC smaller than a coin can hold millions, even billions, of transistors!

Keeping pace with the heart

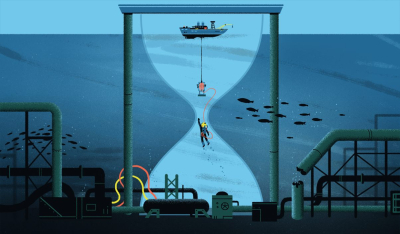

Pacemakers send out electrical signals to the heart to regulate erratic heartbeats. Powered by mains electricity, early pacemakers were as big as televisions, with a single wire or 'lead' implanted in the patient's heart. Patients could move only as far as the wire would let them, and power failures were a major cause of worry!

In 1958, a Swedish surgeon and an engineer came together to invent the first battery-powered external pacemaker. Around the same time, American electrical engineer Wilson Greatbatch was building a machine to record heartbeats. Quite by accident, he realised that with a few changes, the small device gave out a steady electric pulse. After two years of research, Greatbatch unveiled the world's first successful implantable pacemaker, which could be surgically inserted under the skin of the patient's chest.

Picture credit: Google