The Atomichron, unveiled on October 3, 1956, was the world's first commercial atomic clock. Its story is worth revisiting at a time when timekeeping is more accurate than ever before.

In his work of fiction The Time Keeper, American author Mitch Albom has one of his characters say that "man will count all his days, and then smaller segments of the day, and then smaller still - until the counting consumes him, and the wonder of the world he has been given is lost." While the last part of the statement is rather too deep, and well beyond the scope of this column, there might be some truth to the idea of the counting consuming us.

Comes down to counting

When we started, we looked up at the sun and the moon to get a sense of time. We picked up stones, collected water, and were able to tell time even better. And now, we have come to a stage where the best of our clocks are so precise that it would take around 30 billion years for one of them to lose even one second.
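To put the 30-billion-year figure in perspective, it can be converted into a fractional error. The short Python sketch below does that arithmetic. The 365.25-day year is an assumption made here for the conversion, and the function name is purely illustrative.

```python
# Assumption: a year of 365.25 days, i.e. 31,557,600 seconds.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def fractional_error(seconds_lost, years):
    """Return the time lost as a fraction of the total time elapsed."""
    return seconds_lost / (years * SECONDS_PER_YEAR)


# One second lost over roughly 30 billion years.
error = fractional_error(1, 30e9)
print(f"{error:.1e}")
```

Under these assumptions the result is on the order of one part in a billion billion (10^18).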

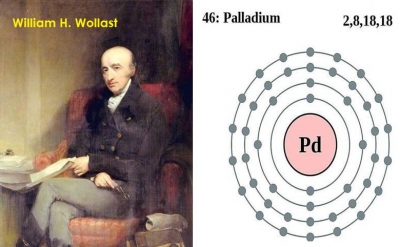

And yet, at the heart of it, the fundamental process remains the same: we count a periodic phenomenon. In a grandfather clock, the pendulum swings back and forth. In a wristwatch, an electric current makes a tuning fork-shaped piece of quartz oscillate. And when it comes to atomic clocks, we use certain resonance frequencies of atoms and count the oscillations associated with electrons jumping between energy levels.
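The idea that every clock simply counts a periodic phenomenon can be sketched in a few lines of Python: the elapsed time is the number of cycles counted divided by the number of cycles per second. The function and constant names below are illustrative. The pendulum period and the quartz frequency are typical values assumed here rather than taken from this column, while the caesium figure is the one used in the SI definition of the second.

```python
from fractions import Fraction


def elapsed_seconds(cycles_counted, frequency_hz):
    """Elapsed time = cycles counted / cycles per second (exact arithmetic)."""
    return Fraction(cycles_counted) / Fraction(frequency_hz)


PENDULUM_HZ = Fraction(1, 2)   # assumption: one full swing every 2 seconds
QUARTZ_HZ = 32_768             # assumption: typical wristwatch crystal
CAESIUM_HZ = 9_192_631_770     # caesium-133 frequency in the SI second

# Counting 30 pendulum swings, or 32,768 * 60 quartz oscillations,
# both add up to one minute.
print(elapsed_seconds(30, PENDULUM_HZ))
print(elapsed_seconds(32_768 * 60, QUARTZ_HZ))

# Counting 9,192,631,770 caesium oscillations adds up to one second.
print(elapsed_seconds(CAESIUM_HZ, CAESIUM_HZ))
```

The faster the oscillation, the finer the slices of time that can be counted, which is one reason atomic clocks can outperform pendulums and quartz.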

What are atomic clocks?

The best of our clocks, by the way, are atomic clocks. As we learned more of the atom's secrets, we were able to build practical applications, including these clocks.

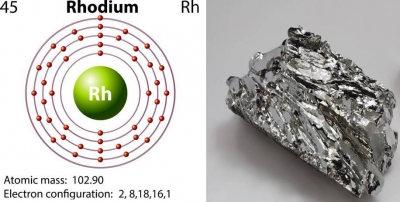

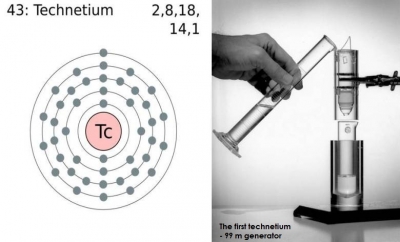

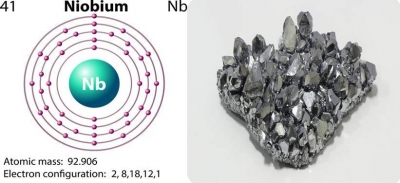

We now know that an atom is made up of a nucleus - consisting of protons and neutrons - that is surrounded by electrons. While the number of electrons varies from element to element, they always occupy discrete energy levels, or orbits.

Electrons can jump to higher orbits around the nucleus on receiving a jolt of energy. Because the atoms of a given element respond only to a very specific frequency when making this jump, scientists can use that frequency to measure time very accurately.

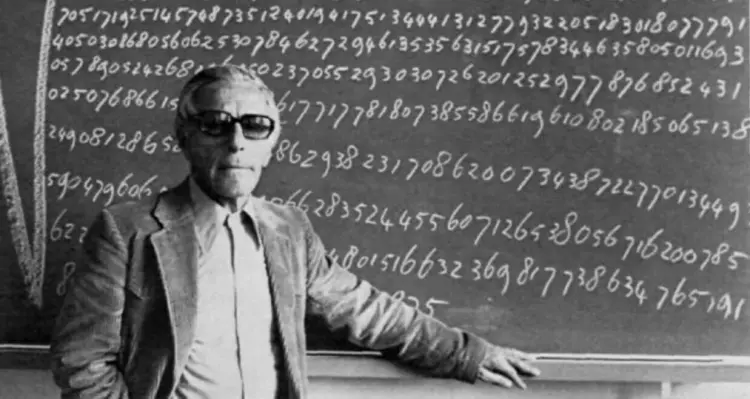

Been around since the 1950s

By the mid-1950s, atomic clocks with caesium atoms that were accurate enough to be used as time standards had been built.

The Research Laboratory of Electronics at the Massachusetts Institute of Technology developed the first commercial atomic clocks around the same time, and these were manufactured by the National Company, Inc. (NATCO) of Malden, Massachusetts.

Initially, the atomic beam clocks that NATCO was building were called just that: ABC. By 1955, the prototypes bore the working name National Atomic Frequency Standard (NAFS). As this acronym was clearly not pleasing to the ear, there was a need for a better name to market the first practical commercial atomic clock.

Quantum electronics equipment

NATCO came up with the name Atomichron, which it then made the generic trademark for all its atomic clocks. In a well-publicised event at the Overseas Press Club in New York, the Atomichron was unveiled to the world on October 3, 1956.

The first commercial atomic clock was also the first piece of quantum electronics equipment made available to the public. In the years that followed, 50 Atomichrons were made and sold to military agencies, government agencies, and universities.

Defining a second

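In 1967, the official definition of the second in the International System of Units (SI) came to be based on caesium. This meant that the internationally accepted unit of time was now defined in terms of a transition inside atoms of caesium: one second is the duration of 9,192,631,770 periods of the radiation corresponding to the transition between two hyperfine levels of the ground state of the caesium-133 atom.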

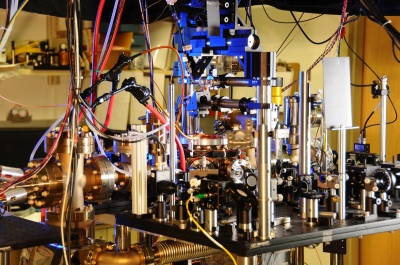

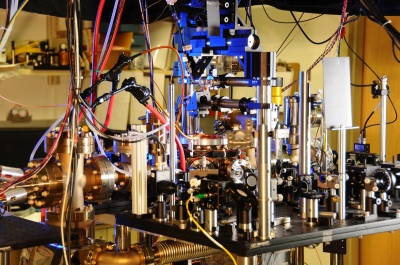

Atomic clocks aren't going to come into our homes anytime soon. About the size of a wardrobe, a typical one consists of interwoven cables, wires, and steel structures that are connected to a vacuum chamber that holds the atoms.

These clocks, however, are already in use everywhere around us. Be it satellite navigation, online communication, or even timed races in the Olympics, atomic clocks are in action. The best of our atomic clocks, as you might have guessed, are employed in research and experiments to further our understanding of the universe around us.

Picture Credit: Google